As language models continue to grow in size and complexity, so do the resource requirements needed to train and deploy them. While large-scale models can achieve remarkable performance across a variety of benchmarks, they are often inaccessible to many organizations because of infrastructure limitations and high operational costs. This gap between capability and deployability presents a practical challenge, particularly for enterprises seeking to embed language models into real-time systems or cost-sensitive environments.

In recent years, small language models (SLMs) have emerged as a potential solution, offering reduced memory and compute requirements without entirely compromising on performance. However, many SLMs struggle to provide consistent results across diverse tasks, and their design often involves trade-offs that limit generalization or usability.

ServiceNow AI Releases Apriel-5B: A Step Toward Practical AI at Scale

To address these concerns, ServiceNow AI has released Apriel-5B, a new family of small language models designed with a focus on inference throughput, training efficiency, and cross-domain versatility. With 4.8 billion parameters, Apriel-5B is small enough to be deployed on modest hardware but still performs competitively on a range of instruction-following and reasoning tasks.
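To make the "modest hardware" claim concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone would occupy. The 4.8 billion parameter count comes from the article; the helper name `weight_memory_gib` is illustrative, and the estimate ignores activations, the KV cache, and framework overhead, so real usage will be higher.

```python
def weight_memory_gib(num_params: float, bytes_per_param: int) -> float:
    """Memory needed to hold the model weights alone, in GiB."""
    return num_params * bytes_per_param / 2**30


APRIEL_PARAMS = 4.8e9  # parameter count stated in the article

# Standard storage sizes per parameter for two common formats.
FORMATS = {"float32": 4, "bfloat16": 2}

for name, nbytes in FORMATS.items():
    gib = weight_memory_gib(APRIEL_PARAMS, nbytes)
    print(f"{name:>8}: {gib:.1f} GiB for weights only")
```

Under these assumptions, the BFloat16 weights come to roughly 9 GiB, which is why a model of this size can plausibly fit on a single mid-range accelerator.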

The Apriel family includes two versions:

- Apriel-5B-Base, a pretrained model intended for further tuning or embedding in pipelines.

- Apriel-5B-Instruct, an instruction-tuned version aligned for chat, reasoning, and task completion.

Both models are released under the MIT license, supporting open experimentation and broader adoption across research and commercial use cases.

Architectural Design and Technical Highlights

Apriel-5B was trained on over 4.5 trillion tokens, a dataset carefully constructed to cover multiple task categories, including natural language understanding, reasoning, and multilingual capabilities. The model uses a dense architecture optimized for inference efficiency, with key technical features such as:

- Rotary positional embeddings (RoPE) with a context window of 8,192 tokens, supporting long-sequence tasks.

- FlashAttention-2, enabling faster attention computation and improved memory utilization.

- Grouped-query attention (GQA), reducing memory overhead during autoregressive decoding.

- Training in BFloat16, which ensures compatibility with modern accelerators while maintaining numerical stability.
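To illustrate why grouped-query attention reduces memory during decoding, the sketch below estimates the size of the key/value cache with and without GQA. The layer count, head counts, and head dimension are hypothetical placeholders chosen for illustration, not Apriel-5B's published configuration; only the 8,192-token context window and the 2-byte BFloat16 width come from the article.

```python
def kv_cache_bytes(num_layers, num_kv_heads, head_dim, seq_len,
                   batch_size=1, bytes_per_value=2):
    """Bytes needed to cache keys and values for every layer and position."""
    # Factor 2: one tensor for keys and one for values in each layer.
    return (2 * num_layers * num_kv_heads * head_dim
            * seq_len * batch_size * bytes_per_value)


# Hypothetical model shape (assumption, NOT Apriel-5B's real configuration).
LAYERS, QUERY_HEADS, KV_HEADS, HEAD_DIM = 28, 24, 8, 128
SEQ_LEN = 8192  # context window stated in the article

# Standard multi-head attention keeps one K/V head per query head.
mha = kv_cache_bytes(LAYERS, QUERY_HEADS, HEAD_DIM, SEQ_LEN)
# GQA shares each K/V head across a group of query heads.
gqa = kv_cache_bytes(LAYERS, KV_HEADS, HEAD_DIM, SEQ_LEN)

print(f"MHA cache: {mha / 2**20:.0f} MiB")
print(f"GQA cache: {gqa / 2**20:.0f} MiB")
print(f"Reduction: {mha / gqa:.1f}x")
```

The cache shrinks by the ratio of query heads to key/value heads, so the saving depends entirely on the grouping a given model chooses.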

These architectural decisions allow Apriel-5B to maintain responsiveness and speed without relying on specialized hardware or extensive parallelization. The instruction-tuned version was fine-tuned using curated datasets and supervised techniques, enabling it to perform well on a range of instruction-following tasks with minimal prompting.
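For readers unfamiliar with the rotary positional embeddings listed among the features, here is a minimal pure-Python sketch of the idea. It uses the interleaved-pair formulation and a frequency base of 10000, both of which are common defaults assumed here for illustration; the article does not state which variant or base Apriel-5B uses.

```python
import math


def rope(vec, position, base=10000.0):
    """Rotate each consecutive pair (vec[i], vec[i+1]) by a position-dependent angle."""
    d = len(vec)
    if d % 2 != 0:
        raise ValueError("vector length must be even")
    out = []
    for i in range(0, d, 2):
        # Pair i//2 rotates with frequency base ** (-i / d).
        theta = position * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out += [x * c - y * s, x * s + y * c]
    return out


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


q = [1.0, 2.0, 3.0, 4.0]
k = [0.5, -1.0, 2.0, 0.25]

# Both pairs of positions are 2 apart, so the attention scores should match.
near = dot(rope(q, 5), rope(k, 3))
far = dot(rope(q, 102), rope(k, 100))
print(near, far)
```

The useful property is that the query-key dot product depends only on the relative offset between the two positions, not on their absolute values.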

Evaluation Insights and Benchmark Comparisons

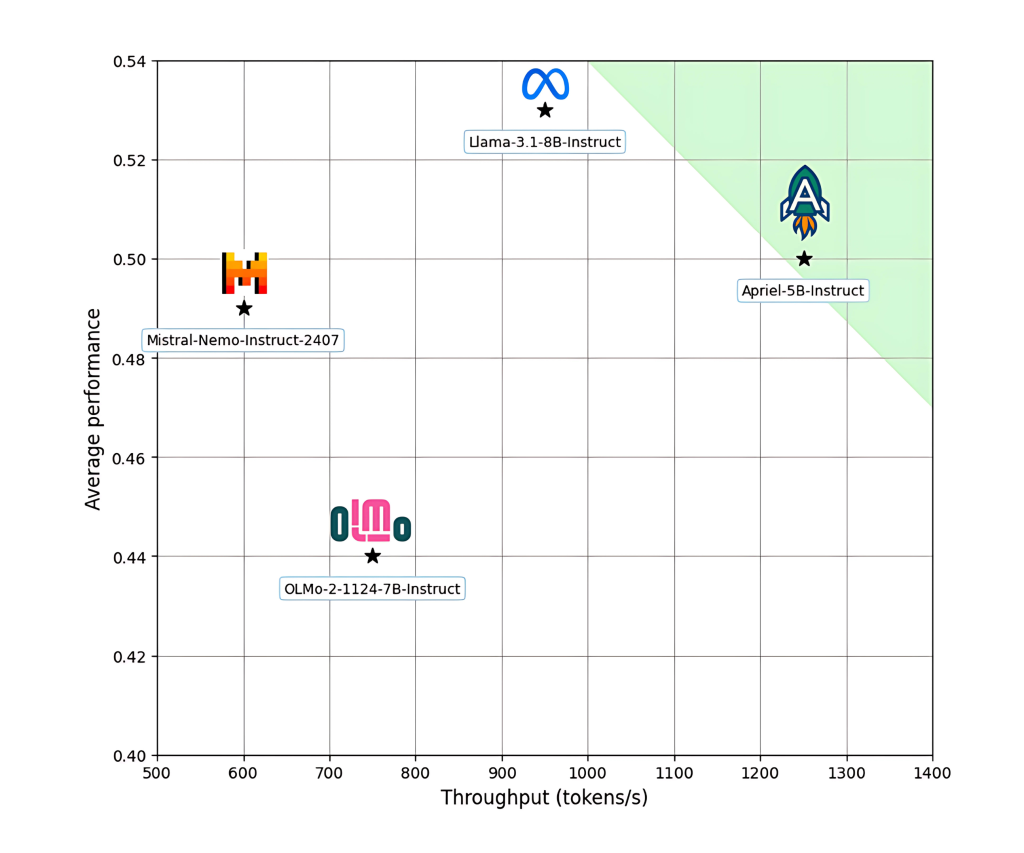

Apriel-5B-Instruct has been evaluated against several widely used open models, including Meta’s LLaMA 3.1–8B, Allen AI’s OLMo-2–7B, and Mistral-Nemo-12B. Despite its smaller size, Apriel shows competitive results across multiple benchmarks:

- Outperforms both OLMo-2–7B-Instruct and Mistral-Nemo-12B-Instruct on average across general-purpose tasks.

- Shows stronger results than LLaMA-3.1–8B-Instruct on math-focused tasks and IF Eval, which evaluates instruction-following consistency.

- Requires significantly fewer compute resources (2.3x fewer GPU hours) than OLMo-2–7B, underscoring its training efficiency.
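As a quick sanity check on the training-efficiency figure, the snippet below converts "2.3x fewer GPU hours" into a percentage saving. It assumes the phrase means the baseline budget divided by 2.3; the baseline of 100,000 GPU hours is a made-up number used only to show the arithmetic, not a reported figure for either model.

```python
def relative_savings(reduction_factor: float) -> float:
    """Fraction of the baseline budget saved when cost is divided by reduction_factor."""
    return 1 - 1 / reduction_factor


REDUCTION = 2.3  # factor stated in the article

# Hypothetical baseline budget, for illustration only.
baseline_gpu_hours = 100_000
reduced_gpu_hours = baseline_gpu_hours / REDUCTION

print(f"Savings: {relative_savings(REDUCTION):.1%}")
print(f"{baseline_gpu_hours:,} GPU hours -> {reduced_gpu_hours:,.0f} GPU hours")
```

Under that reading, a 2.3x reduction corresponds to a little over half of the baseline compute budget being saved.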

These results suggest that Apriel-5B hits a productive midpoint between lightweight deployment and task versatility, particularly in domains where real-time performance and limited resources are key considerations.

Conclusion: A Practical Addition to the Model Ecosystem

Apriel-5B represents a thoughtful approach to small model design, one that emphasizes balance rather than scale. By focusing on inference throughput, training efficiency, and core instruction-following performance, ServiceNow AI has created a model family that is easy to deploy, adaptable to varied use cases, and openly available for integration.

Its strong performance on math and reasoning benchmarks, combined with a permissive license and efficient compute profile, makes Apriel-5B a compelling choice for teams building AI capabilities into products, agents, or workflows. In a field increasingly defined by accessibility and real-world applicability, Apriel-5B is a practical step forward.

Check out ServiceNow-AI/Apriel-5B-Base and ServiceNow-AI/Apriel-5B-Instruct. All credit for this research goes to the researchers of this project.

Asif Razzaq is the CEO of Marktechpost Media Inc. As a visionary entrepreneur and engineer, Asif is committed to harnessing the potential of Artificial Intelligence for social good. His most recent endeavor is the launch of an Artificial Intelligence Media Platform, Marktechpost, which stands out for its in-depth coverage of machine learning and deep learning news that is both technically sound and easily understandable by a wide audience. The platform boasts over 2 million monthly views, illustrating its popularity among audiences.