IBM has quietly constructed a powerful presence within the open-source AI ecosystem, and its newest launch exhibits why it shouldn’t be neglected. The corporate has launched two new embedding fashions—granite-embedding-english-r2 and granite-embedding-small-english-r2—designed particularly for high-performance retrieval and RAG (retrieval-augmented technology) programs. These fashions will not be solely compact and environment friendly but additionally licensed underneath Apache 2.0, making them prepared for industrial deployment.

What Fashions Did IBM Launch?

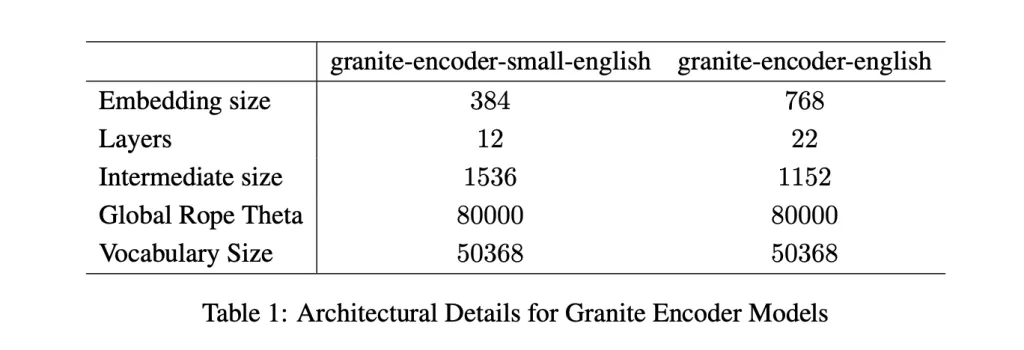

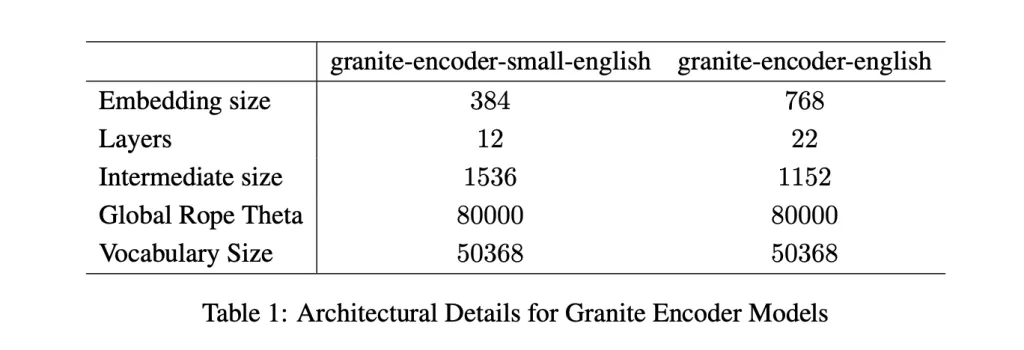

The 2 fashions goal completely different compute budgets. The bigger granite-embedding-english-r2 has 149 million parameters with an embedding dimension of 768, constructed on a 22-layer ModernBERT encoder. Its smaller counterpart, granite-embedding-small-english-r2, is available in at simply 47 million parameters with an embedding dimension of 384, utilizing a 12-layer ModernBERT encoder.

Regardless of their variations in dimension, each assist a most context size of 8192 tokens, a serious improve from the first-generation Granite embeddings. This long-context functionality makes them extremely appropriate for enterprise workloads involving lengthy paperwork and sophisticated retrieval duties.

What’s Contained in the Structure?

Each fashions are constructed on the ModernBERT spine, which introduces a number of optimizations:

- Alternating international and native consideration to stability effectivity with long-range dependencies.

- Rotary positional embeddings (RoPE) tuned for positional interpolation, enabling longer context home windows.

- FlashAttention 2 to enhance reminiscence utilization and throughput at inference time.

IBM additionally skilled these fashions with a multi-stage pipeline. The method began with masked language pretraining on a two-trillion-token dataset sourced from net, Wikipedia, PubMed, BookCorpus, and inner IBM technical paperwork. This was adopted by context extension from 1k to 8k tokens, contrastive studying with distillation from Mistral-7B, and domain-specific tuning for conversational, tabular, and code retrieval duties.

How Do They Carry out on Benchmarks?

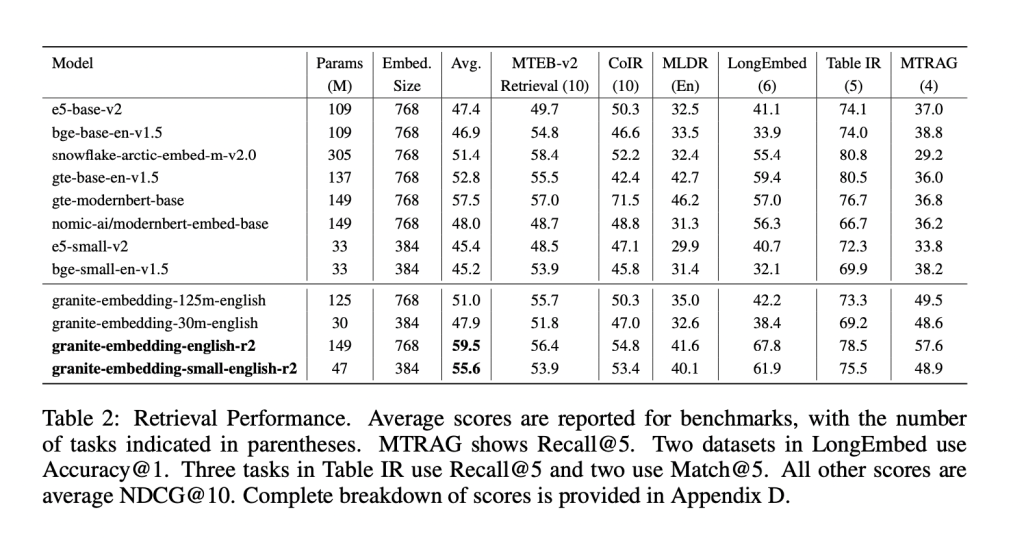

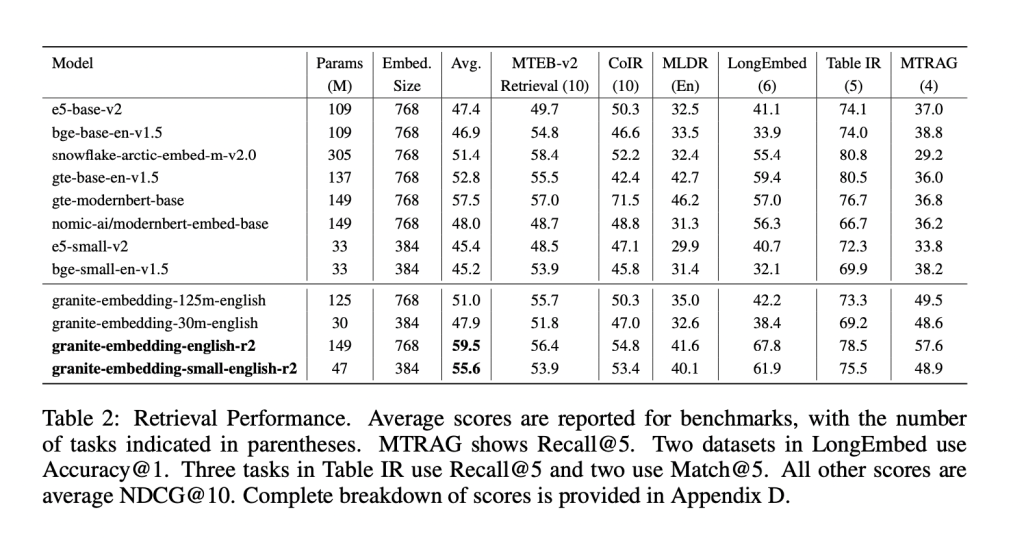

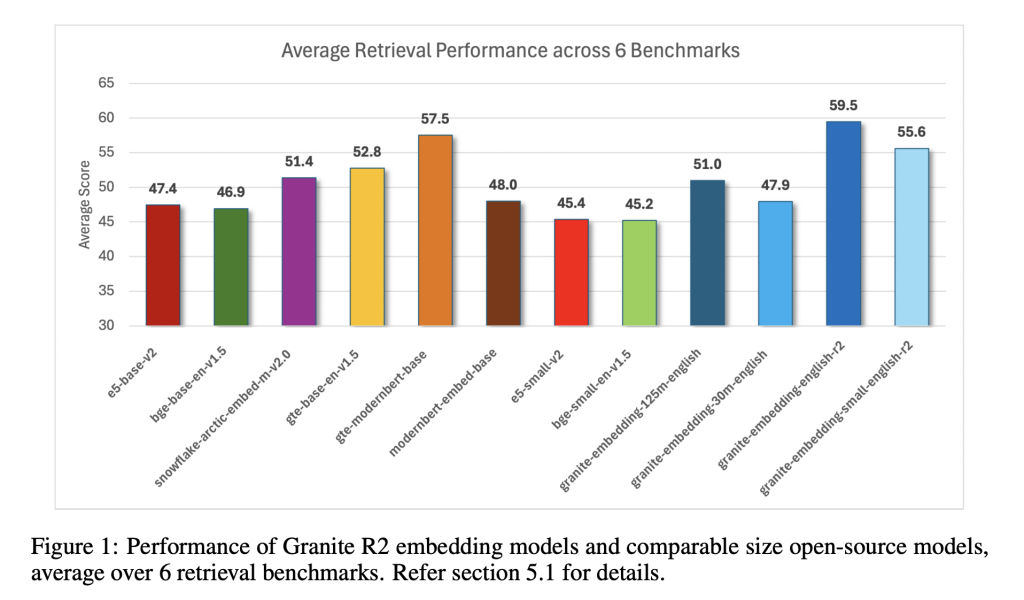

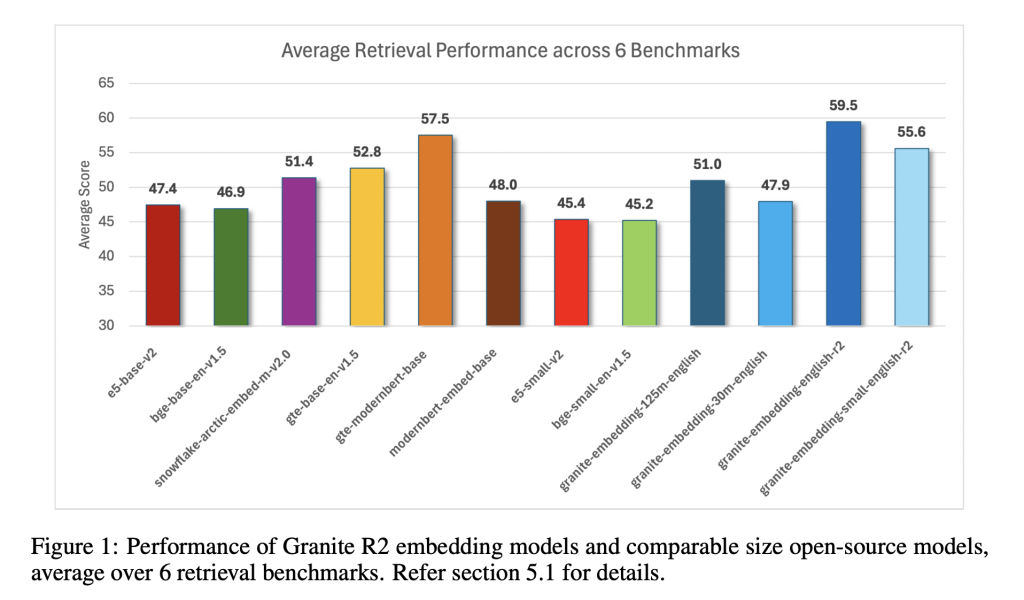

The Granite R2 fashions ship sturdy outcomes throughout extensively used retrieval benchmarks. On MTEB-v2 and BEIR, the bigger granite-embedding-english-r2 outperforms equally sized fashions like BGE Base, E5, and Arctic Embed. The smaller mannequin, granite-embedding-small-english-r2, achieves accuracy near fashions two to a few occasions bigger, making it significantly enticing for latency-sensitive workloads.

Each fashions additionally carry out effectively in specialised domains:

- Lengthy-document retrieval (MLDR, LongEmbed) the place 8k context assist is important.

- Desk retrieval duties (OTT-QA, FinQA, OpenWikiTables) the place structured reasoning is required.

- Code retrieval (CoIR), dealing with each text-to-code and code-to-text queries.

Are They Quick Sufficient for Giant-Scale Use?

Effectivity is likely one of the standout points of those fashions. On an Nvidia H100 GPU, the granite-embedding-small-english-r2 encodes practically 200 paperwork per second, which is considerably quicker than BGE Small and E5 Small. The bigger granite-embedding-english-r2 additionally reaches 144 paperwork per second, outperforming many ModernBERT-based options.

Crucially, these fashions stay sensible even on CPUs, permitting enterprises to run them in much less GPU-intensive environments. This stability of pace, compact dimension, and retrieval accuracy makes them extremely adaptable for real-world deployment.

What Does This Imply for Retrieval in Apply?

IBM’s Granite Embedding R2 fashions exhibit that embedding programs don’t want large parameter counts to be efficient. They mix long-context assist, benchmark-leading accuracy, and excessive throughput in compact architectures. For corporations constructing retrieval pipelines, information administration programs, or RAG workflows, Granite R2 gives a production-ready, commercially viable various to current open-source choices.

Abstract

In brief, IBM’s Granite Embedding R2 fashions strike an efficient stability between compact design, long-context functionality, and powerful retrieval efficiency. With throughput optimized for each GPU and CPU environments, and an Apache 2.0 license that permits unrestricted industrial use, they current a sensible various to bulkier open-source embeddings. For enterprises deploying RAG, search, or large-scale information programs, Granite R2 stands out as an environment friendly and production-ready choice.

Take a look at the Paper, granite-embedding-small-english-r2 and granite-embedding-english-r2. Be happy to take a look at our GitHub Page for Tutorials, Codes and Notebooks. Additionally, be at liberty to comply with us on Twitter and don’t overlook to affix our 100k+ ML SubReddit and Subscribe to our Newsletter.

Asif Razzaq is the CEO of Marktechpost Media Inc.. As a visionary entrepreneur and engineer, Asif is dedicated to harnessing the potential of Synthetic Intelligence for social good. His most up-to-date endeavor is the launch of an Synthetic Intelligence Media Platform, Marktechpost, which stands out for its in-depth protection of machine studying and deep studying information that’s each technically sound and simply comprehensible by a large viewers. The platform boasts of over 2 million month-to-month views, illustrating its recognition amongst audiences.