In today's rapidly evolving technological landscape, developers and organizations often grapple with a series of practical challenges. One of the most significant hurdles is the efficient processing of diverse data types (text, speech, and vision) within a single system. Traditional approaches have typically required separate pipelines for each modality, leading to increased complexity, higher latency, and greater computational costs. In many applications, from healthcare diagnostics to financial analytics, these limitations can hinder the development of responsive and adaptive AI solutions. The need for models that balance robustness with efficiency is more pressing than ever. In this context, Microsoft's recent work on small language models (SLMs) provides a promising approach by striving to consolidate capabilities in a compact, versatile package.

Microsoft AI has recently released Phi-4-multimodal and Phi-4-mini, the newest additions to its Phi family of SLMs. These models have been developed with a clear focus on streamlining multimodal processing. Phi-4-multimodal is designed to handle text, speech, and visual inputs simultaneously, all within a unified architecture. This integrated approach means that a single model can now interpret and generate responses based on varied data types without the need for separate, specialized systems.

In contrast, Phi-4-mini is tailored specifically for text-based tasks. Despite being more compact, it has been engineered to excel in reasoning, coding, and instruction following. Both models are available via platforms like Azure AI Foundry and Hugging Face, ensuring that developers from a range of industries can experiment with and integrate these models into their applications. This balanced release represents a thoughtful step towards making advanced AI more practical and accessible.

Technical Details and Benefits

On the technical level, Phi-4-multimodal is a 5.6-billion-parameter model that incorporates a mixture-of-LoRAs, a method that allows the integration of speech, vision, and text within a single representation space. This design significantly simplifies the architecture by removing the need for separate processing pipelines. As a result, the model not only reduces computational overhead but also achieves lower latency, which is particularly beneficial for real-time applications.
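To make the mixture-of-LoRAs idea more concrete, here is a minimal sketch in plain Python. It assumes the usual LoRA arrangement, in which a shared base weight stays frozen and each modality contributes its own low-rank update `B @ A`. The matrix sizes, values, adapter names, and helper functions are all hypothetical and chosen for readability; this is not Microsoft's implementation.

```python
# Illustrative only: toy shapes and values, not Phi-4-multimodal's real weights.

def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def add(a, b):
    """Element-wise sum of two matrices with the same shape."""
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

# Frozen base weight shared by every modality (2x2 for readability).
BASE_W = [[1.0, 0.0],
          [0.0, 1.0]]

# Hypothetical per-modality adapters: each is a pair (B, A) of rank 1,
# so the update B @ A has the same shape as BASE_W.
ADAPTERS = {
    "vision": ([[1.0], [0.0]], [[0.0, 0.5]]),
    "speech": ([[0.0], [1.0]], [[0.25, 0.0]]),
}

def effective_weight(modality):
    """Return BASE_W unchanged, or BASE_W + B @ A if the modality has an adapter."""
    if modality not in ADAPTERS:
        return BASE_W
    b, a = ADAPTERS[modality]
    return add(BASE_W, matmul(b, a))

def project(x, modality):
    """Apply the modality's effective weight to a row vector x."""
    return matmul([x], effective_weight(modality))[0]

if __name__ == "__main__":
    for name in ("text", "vision", "speech"):
        print(name, project([1.0, 1.0], name))
```

The point of the sketch is that only the small `B` and `A` matrices differ per modality, while the base weight is reused, which is why a single model can serve several input types without separate pipelines.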

Phi-4-mini, with its 3.8 billion parameters, is built as a dense, decoder-only transformer. It features grouped-query attention, has a vocabulary of 200,000 tokens, and can handle sequences of up to 128,000 tokens. Despite its smaller size, Phi-4-mini performs remarkably well in tasks that require deep reasoning and language understanding. One of its standout features is the capability for function calling, which allows it to interact with external tools and APIs and so extends its practical utility without requiring a larger, more resource-intensive model.
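Function calling is easier to picture with a small example. The sketch below shows the application side of the loop: the model emits a structured request, and the host program looks up and runs the matching tool. The JSON shape (`name` plus `arguments`), the `get_weather` stub, and the `dispatch` helper are assumptions made for illustration and are not Phi-4-mini's documented format.

```python
import json

def get_weather(city):
    """Hypothetical tool: a real application would call a weather API here."""
    return f"Sunny in {city}"

# Registry mapping tool names the model may request to Python callables.
TOOLS = {"get_weather": get_weather}

def dispatch(model_output):
    """Parse an assumed JSON tool call and run the matching registered tool."""
    call = json.loads(model_output)
    name = call["name"]
    if name not in TOOLS:
        raise KeyError(f"unknown tool: {name}")
    return TOOLS[name](**call["arguments"])

if __name__ == "__main__":
    # Stand-in for text the model might produce when it decides to use a tool.
    simulated_output = '{"name": "get_weather", "arguments": {"city": "Paris"}}'
    print(dispatch(simulated_output))
```

In a full application, the tool's return value would typically be passed back to the model so it can compose the final answer.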

Both models have been optimized for on-device execution. This optimization is particularly important for applications in environments with limited compute resources or in edge computing scenarios. The models' reduced computational requirements make them a cost-effective choice, ensuring that advanced AI functionalities can be deployed even on devices that don't have extensive processing capabilities.
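A rough back-of-the-envelope calculation shows why these parameter counts matter on constrained hardware. The sketch below estimates the memory needed just to hold the weights at a few common precisions. The parameter counts come from the article; the bytes-per-parameter figures are the standard sizes for each format, and the estimate deliberately ignores activations, the KV cache, and runtime overhead, so real footprints will be higher.

```python
# Parameter counts as stated in the article.
PARAMS = {"Phi-4-multimodal": 5.6e9, "Phi-4-mini": 3.8e9}

# Storage cost per parameter for a few common numeric formats.
BYTES_PER_PARAM = {"fp16": 2.0, "int8": 1.0, "int4": 0.5}

def weight_gb(num_params, precision):
    """Approximate weight storage in gigabytes (1 GB = 1e9 bytes), weights only."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9

if __name__ == "__main__":
    for model, count in PARAMS.items():
        for precision in BYTES_PER_PARAM:
            print(f"{model} @ {precision}: ~{weight_gb(count, precision):.1f} GB")
```

Under these assumptions, Phi-4-mini needs roughly 7.6 GB for its weights in 16-bit precision and about 1.9 GB at 4 bits, which is the range where phones and small edge devices become plausible targets.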

Performance Insights and Benchmark Data

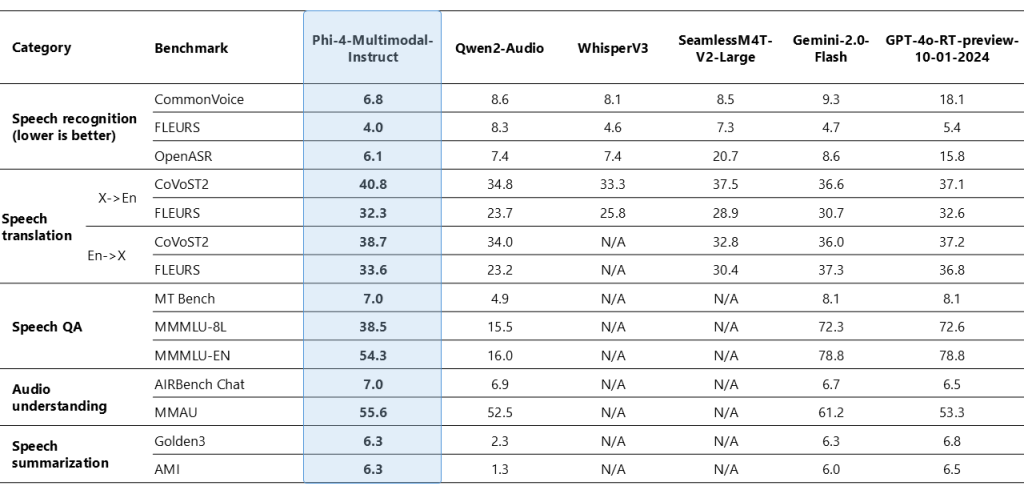

Benchmark results provide a clear view of how these models perform in practical scenarios. For instance, Phi-4-multimodal has demonstrated an impressive word error rate (WER) of 6.14% in automatic speech recognition (ASR) tasks. This is a modest improvement over earlier models like WhisperV3, which reported a WER of 6.5%. Such improvements are particularly significant in applications where accuracy in speech recognition is critical.
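For readers unfamiliar with the metric, WER is the word-level edit distance between a reference transcript and the system's output, divided by the number of reference words. The sketch below is a generic implementation of that definition, not the benchmark's actual scoring script, and the example sentences are made up. It also computes the relative gap between the two WER figures quoted above.

```python
def word_error_rate(reference, hypothesis):
    """WER = (substitutions + deletions + insertions) / reference word count."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference transcript must contain at least one word")
    # prev[j] holds the edit distance between the ref words seen so far and hyp[:j].
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        cur = [i]
        for j, hyp_word in enumerate(hyp, start=1):
            cost = 0 if ref_word == hyp_word else 1
            cur.append(min(prev[j] + 1,          # deletion
                           cur[j - 1] + 1,       # insertion
                           prev[j - 1] + cost))  # match or substitution
        prev = cur
    return prev[-1] / len(ref)

def relative_reduction(baseline, improved):
    """Fraction by which `improved` is lower than `baseline`."""
    return (baseline - improved) / baseline

if __name__ == "__main__":
    # One substitution and one deletion against six reference words.
    print(word_error_rate("the cat sat on the mat", "the cat sit on mat"))
    # Relative gap between the two WER figures reported in the article.
    print(f"{relative_reduction(6.5, 6.14):.1%}")
```

By this calculation, moving from 6.5% to 6.14% is a relative reduction of about 5.5%, which is consistent with describing the gain as modest.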

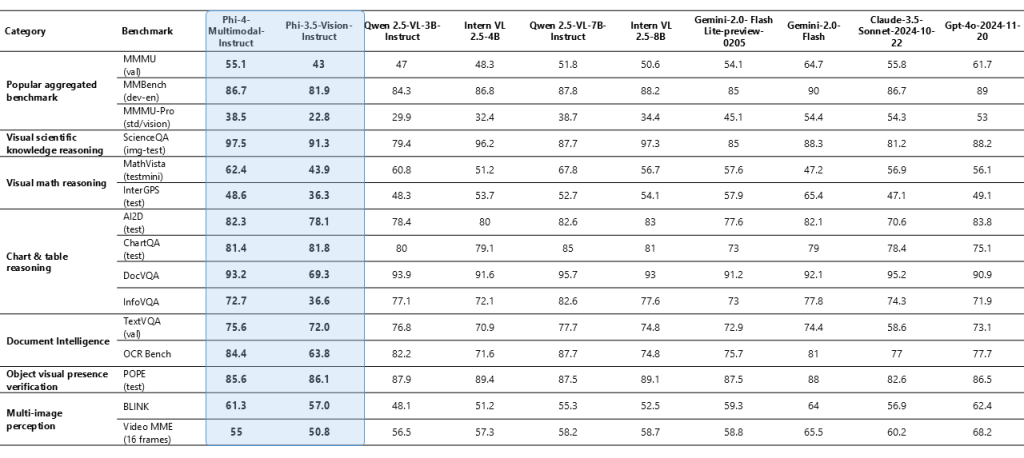

Beyond ASR, Phi-4-multimodal also shows strong performance in tasks such as speech translation and summarization. Its ability to process visual inputs is notable in tasks like document reasoning, chart understanding, and optical character recognition (OCR). In several benchmarks, ranging from synthetic speech interpretation on visual data to document analysis, the model's performance consistently matches or exceeds that of larger, more resource-intensive models.

Similarly, Phi-4-mini has been evaluated on a variety of language benchmarks, where it holds its own despite its more compact design. Its aptitude for reasoning, handling complex mathematical problems, and coding tasks underlines its versatility in text-based applications. The inclusion of a function-calling mechanism further enriches its potential, enabling the model to draw on external data and tools seamlessly. These results underscore a measured and thoughtful improvement in multimodal and language processing capabilities, providing clear benefits without overstating performance.

Conclusion

The introduction of Phi-4-multimodal and Phi-4-mini by Microsoft marks an important evolution in the field of AI. Rather than relying on bulky, resource-demanding architectures, these models offer a refined balance between efficiency and performance. By integrating multiple modalities in a single, cohesive framework, Phi-4-multimodal simplifies the complexity inherent in multimodal processing. Meanwhile, Phi-4-mini provides a robust solution for text-intensive tasks, proving that smaller models can indeed offer significant capabilities.

Check out the Technical details and Model on Hugging Face. All credit for this research goes to the researchers of this project.

Aswin AK is a consulting intern at MarkTechPost. He is pursuing his Dual Degree at the Indian Institute of Technology, Kharagpur. He is passionate about data science and machine learning, bringing a strong academic background and hands-on experience in solving real-life cross-domain challenges.